Today, a story to remind us to be careful about what we read into data. It is also a reminder that “you cannot eliminate your biases” (an article I wrote some time ago on the topic of heuristics), and therefore of how vitally important it is to develop strategies to “Avoid Groupthink” so that you don’t draw the wrong conclusions from data. BTW, if you do not already have DEI (Diversity, Equity, Inclusion) as one of the very top priorities for your business (and please be honest with yourself: is it a “must-have” or a “nice-to-have” for you at the moment?), then consider that diversity of thought is a key strategy for reducing the business risk of drawing the wrong conclusions from your data. If you all look and sound the same and come from similar backgrounds, chances are you will think along the same lines, and your shared biases will keep you from seeing what you are missing.

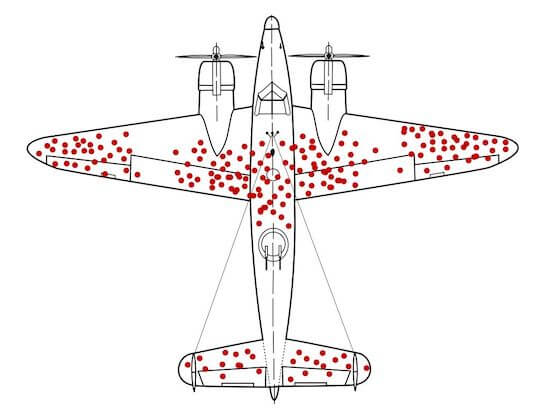

So, let’s start with what you see in this picture, which plots where anti-aircraft fire damaged aircraft that returned to base after sorties in World War II.

What do you see? From that, what conclusions would you draw?

Now take a look at the story behind the picture, including the reference to yet another heuristic, “survivor bias”:

When data gives the wrong solution

During World War II, researchers at the Center for Naval Analysis faced a critical problem. Many bombers were getting shot down on runs over Germany. The naval researchers knew they needed hard data to solve this problem and went to work. After each mission, the bullet holes and damage on each bomber were painstakingly reviewed and recorded. The researchers pored over the data looking for vulnerabilities.

The data began to show a clear pattern (see picture). Most damage was to the wings and body of the plane.

The solution to their problem was clear. Increase the armour on the plane’s wings and body. But there was a problem. The analysis was completely wrong.

Before the planes were modified, a Hungarian-Jewish statistician named Abraham Wald reviewed the data. Wald had fled Nazi-occupied Austria and worked in New York with other academics to help the war effort.

Wald’s review pointed out a critical flaw in the analysis. The researchers had only looked at bombers that had returned to base.

Missing from the data? Every plane that had been shot down.

But the research wasn’t a wasted effort. These surviving bombers rarely had damage in the cockpit, engine, and parts of the tail. This wasn’t because of superior protection to those areas. In fact, these were the most vulnerable areas on the entire plane. The researchers’ bullet hole data had created a map of the exact places that the bomber could be shot and still survive.

With the new analysis in hand, crews reinforced the bombers’ cockpit, engines, and tail armour. The result was fewer fatalities and greater success of bombing missions. This analysis proved to be so useful that it continued to influence military plane design up through the Vietnam War.

This story is a vivid example of survivor bias. Survivor bias is when we only look at the data of those who succeed and exclude those who fail.

Survivor bias is all around us, especially in the media. You read articles about entrepreneurs who risked everything financially and are now a success. But no one profiles the hundred other entrepreneurs who followed the same strategy and went bankrupt.

Or consider the business classic, Good to Great, which profiled successful companies and the characteristics that made them “great.” But what about all the companies that failed but also had “Level 5 Leaders” and “the right people on the bus”? The analysis excludes these companies “missing from the data”.

The takeaway: When solving a problem, ask yourself if you’re only looking at the ‘survivors.’ Your solution might not be in what is there, but what is missing.

From a post by Trevor Bragdon, itself inspired by the storytelling of Matthew Syed in “Black Box Thinking”. Also, I highly recommend “Sideways”, the podcast from Matthew Syed.
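
If you would like to see the effect for yourself, here is a minimal simulation sketch in Python. It is my own illustration, not part of the original post, and every number in it is invented: I assume each plane takes a single hit that is equally likely to land on any section, and I assume made-up probabilities that a hit in each section brings the plane down. The function name `simulate` and the table `LOSS_PROBABILITY` are hypothetical names chosen for this example.

```python
import random
from collections import Counter

# Hypothetical probability that a single hit in each section downs the plane.
# These numbers are invented for illustration; they are not historical data.
LOSS_PROBABILITY = {
    "wings": 0.1,
    "fuselage": 0.1,
    "tail": 0.5,
    "cockpit": 0.7,
    "engine": 0.8,
}


def simulate(n_planes=10_000, seed=0):
    """Return (hits on all planes, hits on returning planes) per section."""
    rng = random.Random(seed)
    sections = list(LOSS_PROBABILITY)
    all_hits = Counter()
    survivor_hits = Counter()
    for _ in range(n_planes):
        # Assumption: each plane takes one hit, equally likely anywhere.
        section = rng.choice(sections)
        all_hits[section] += 1
        # The plane returns only if the hit does not bring it down.
        if rng.random() >= LOSS_PROBABILITY[section]:
            survivor_hits[section] += 1
    return all_hits, survivor_hits


if __name__ == "__main__":
    all_hits, survivor_hits = simulate()
    print(f"{'section':<10}{'all planes':>12}{'returned':>10}")
    for section in LOSS_PROBABILITY:
        print(f"{section:<10}{all_hits[section]:>12}{survivor_hits[section]:>10}")
```

Under these assumptions the hits are spread roughly evenly across all planes, yet the planes that return show most of their damage on the wings and fuselage. Anyone who only counts holes on the survivors would conclude that those are the areas being hit most, which is exactly the mistake in the story.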