The best teams make more mistakes.

Yes. Counter-intuitive but true.

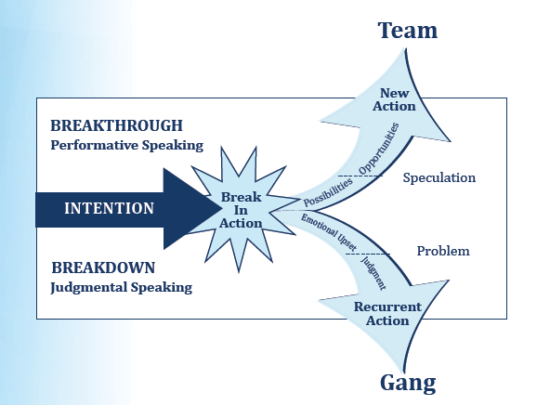

An earlier post on this site, “To have fewer conflicts, disagree more”, focussed on the tool in the image above, called the Breakdown Recovery Model. I learned this model from Travis Carson of Market Force over a decade ago when we roomed together at a global partnership retreat. I kept him up until after 2 a.m., absorbing his teaching like a sponge. Market Force is all about understanding ourselves as individuals and then applying that to creating high-performing teams.

As an illustration of how powerful this is, one of the early clients I taught this to was the COO of one of the largest offshore financial services firms in Cayman. Pretty soon, this image was pinned up beside the computer monitors of many people in their business. Fast forward ten years and that person has been successfully leading a consulting business of their own for some time, with Market Force at the heart of it, and has now taught the principles to thousands in many countries.

Powerful stuff, and the core message is worth repeating in different ways. Today, then, I give you a piece written recently by Tim Harford; you will see the same message in there.

Perhaps you might look to apply it to your own leadership and your own business?

The art of making good mistakes

12 October 2023, by Tim Harford

Do good teams make fewer mistakes? It seems a reasonable hypothesis. But in the early 1990s, when a young researcher looked at evidence from medical teams at two Massachusetts hospitals, the numbers told her a completely different story: the teams who displayed the best teamwork were the ones making the most mistakes. What on earth was going on?

The researcher’s name was Amy Edmondson and, 30 years after that original puzzle, her new book Right Kind of Wrong unpicks a morass of confusion, contradiction and glib happy talk about the joys of failure.

She solved the puzzle soon enough. The best teams didn’t make more errors; they admitted to making more errors. Dysfunctional teams admitted to very few, for the simple reason that nobody on those teams felt safe owning up.

The timeworn euphemism for a screw-up is a “learning experience”, but Edmondson’s story points to a broad truth about that cliché: neither organisations nor people can learn from their mistakes if they deny that the mistakes ever happened.

Such denial is common enough, particularly at an organisational level, and for the obvious backside-covering reasons. But it can be easy to overlook the implications. For example, Edmondson recalls a meeting with executives from a financial services company in April 2020. With hospitals across the world overwhelmed by Covid-sufferers in acute respiratory distress, and many economies in lockdown, they told Edmondson that their attitude to failure had changed. Normally, they explained, they were enthusiastic about sensible risk-taking and felt it was OK to fail if you learnt from that failure. Not during a pandemic, however. They had decided that failure was temporarily “off-limits”.

What nonsense. The moment that Covid turned the world upside-down was exactly the time to take calculated risks and learn quickly, not to mention a time when failures would be inevitable. Demanding perfection against such a backdrop guaranteed ponderousness and denial.

It can be wise to aim for perfection, explains Edmondson, but not without laying the groundwork for people to feel safe in admitting mistakes or in reporting mistakes from others. For example, when Paul O’Neill became the boss of the US aluminium company Alcoa in 1987, he set the apparently unachievable target of zero workplace injuries. That target lifted the financial performance of Alcoa because it helped to instil a highly profitable focus on detail and quality.

The case is celebrated in business books. But it would surely have backfired had O’Neill not written to every worker, giving them his personal phone number and asking them to call him if there were any safety violations.

Another famous example is Toyota’s Andon Cord: any production line worker can tug the cord above their workstation if they see signs of a problem. (Contrary to myth, the cord does not immediately halt the production line, but it does trigger an urgent huddle to discuss the problem. The line stops if the issue isn’t resolved within a minute or so.) The Andon Cord is a physical representation of Toyota’s commitment to listen to production-line workers. We want to hear from you, it says.

Creating this sense of psychological safety around reporting mistakes is essential, but it is not the only ingredient of an intelligent response to failure. Another is the data to discern the difference between help and harm. In the history of medicine, such data has usually been missing. Many people recover from their ailments even with inept care, while others die despite receiving the best treatment. And since every case is different, the only sure way to decide whether a treatment is effective is to run a large and suitably controlled experiment.

This idea is so simple that a prehistoric civilisation could have used it, but it didn’t take off until after the second world war. As Druin Burch explains in Taking the Medicine, scholars and doctors groped around for centuries without ever quite seizing upon it. A thousand years ago, Chinese scholars ran a controlled trial of ginseng, with two runners each running a mile: “The one without the ginseng developed severe shortness of breath, while the one who took the ginseng breathed evenly and smoothly.” With 200 runners they might have learnt something; comparing a pair, the experiment was useless.

The Baghdad-based scholar Abu Bakr al-Razi tried a clinical trial even earlier, in the 10th century, but succeeded only in convincing himself that bloodletting cured meningitis. One plausible explanation for his error is that he didn’t randomly assign patients to the treatment and control group but chose those he felt most likely to benefit.

In the end, the idea of a properly randomised controlled trial was formalised as late as 1923, and the first such clinical trials did not occur until the 1940s. As a result, doctors made mistake after mistake for centuries, without having the analytical tool available to learn from those errors.

Nearly 2,000 years ago, the classical physician Galen pronounced that he had a treatment which cured everyone “in a short time, except those whom it does not help, who all die . . . it fails only in incurable cases”. Laughable. But how many decisions in business or politics today are justified on much the same basis?

A culture in which we learn from failure requires both an atmosphere in which people can speak out, and an analytical framework that can discern the difference between what works and what doesn’t. Similar principles apply to individuals. We need to keep an open mind to the possibilities of our own errors, actively seek out feedback for improvement, and measure progress and performance where feasible. We must be unafraid to admit mistakes and to commit to improve in the future.

That is simple advice to prescribe. It’s not so easy to swallow.